Object Recognition in Autonomous Rover

Adding a human detection system to a food delivery robot that serves boarding students on campus who don’t have easy access to food delivery services.

Cameras, AI, and Food Delivery

Even if you don't use food delivery services like DoorDash and Uber Eats, you can't deny their convenience. And when you're an international student living on campus, they're often the only option for non-cafeteria food.

I went to a high school that took in boarding students from all over the world. Unsurprisingly, boarding students loved using food delivery apps. But they still had to walk all the way to the front gate to pick up their food (a surprisingly long journey). So my friend Jackson and I decided to build a robot that would deliver food from the front gate to the dorms.

I focused on the control systems that piloted the rover because Jackson (an electrical engineering major) was mostly interested in the hardware. Specifically, I worked on a computer vision system that would allow the rover to avoid obstacles and people.

The Software

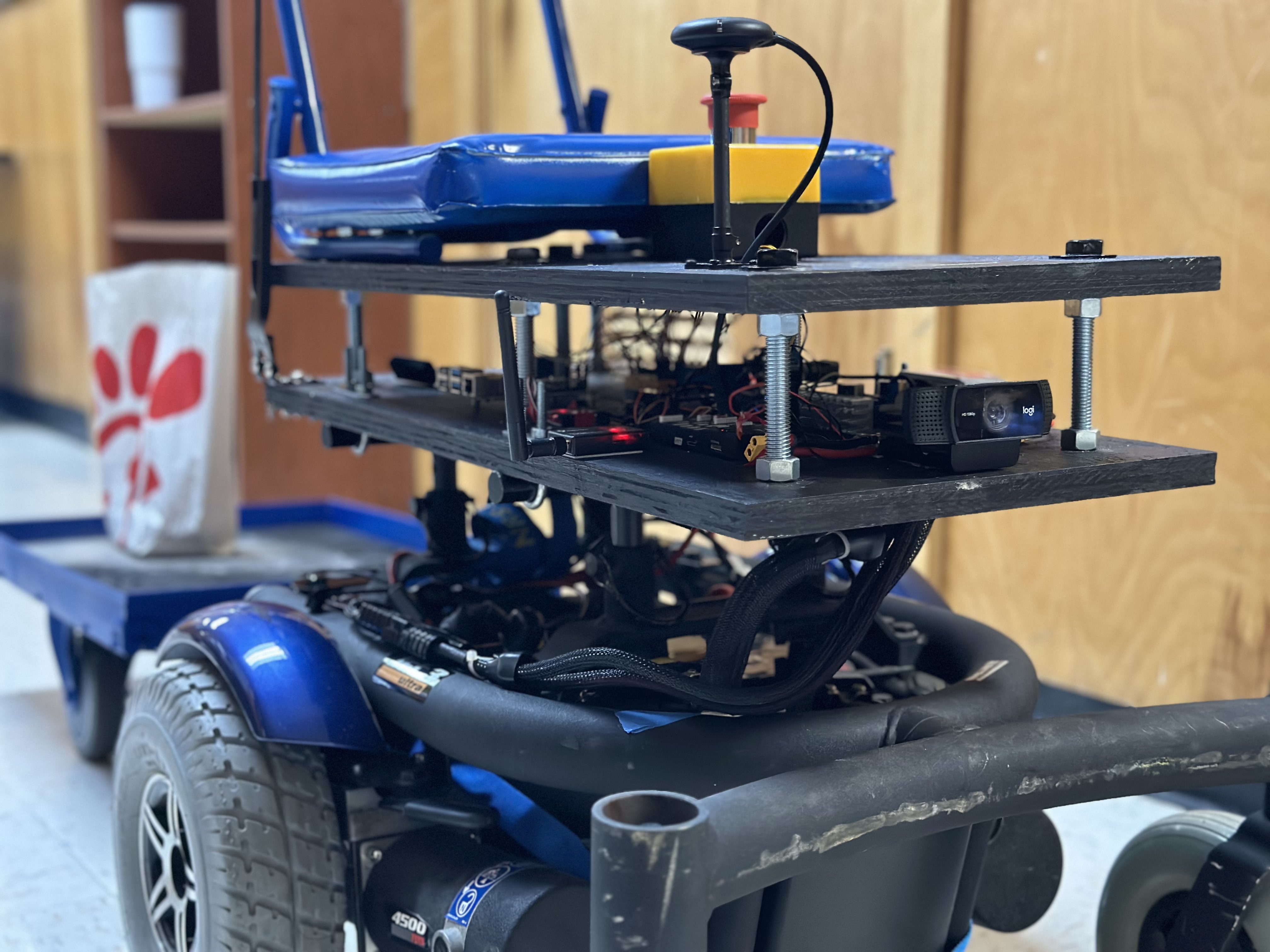

Since the most wiring I did was duct-taping the camera's USB cable to the robot, I'll focus on the software side of things. There are two boards on the rover: a Raspberry Pi and a Pixhawk flight controller. The main driving system is GPS-based and controlled by the Pixhawk. The Raspberry Pi uses the camera to run a detection layer on top of the Pixhawk's pathing system, and that layer cuts power to the motors if it detects a person.
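To make the split between the two boards concrete, here is a minimal sketch of the override idea, not the rover's actual code. The `set_motors_enabled` callback is a hypothetical stand-in for whatever link talks to the Pixhawk, and letting the rover drive again once the person is gone is an assumption.

```python
from typing import Callable, Iterable


def run_safety_layer(
    person_flags: Iterable[bool],
    set_motors_enabled: Callable[[bool], None],
) -> None:
    """Enable or disable the motors based on one detection result per frame.

    person_flags: True when the detector saw a person in that frame.
    set_motors_enabled: hypothetical callback that talks to the Pixhawk.
    """
    enabled = True  # assumption: the rover starts out free to drive
    for person_seen in person_flags:
        want_enabled = not person_seen
        if want_enabled != enabled:
            # Only send a command when the state actually changes.
            set_motors_enabled(want_enabled)
            enabled = want_enabled


# Usage: record the commands instead of driving real hardware.
commands = []
run_safety_layer([False, True, True, False], commands.append)
print(commands)  # [False, True]
```

The GPS pathing never needs to know about the camera here. The detection layer only flips a single on/off switch on top of it.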

GPS navigation

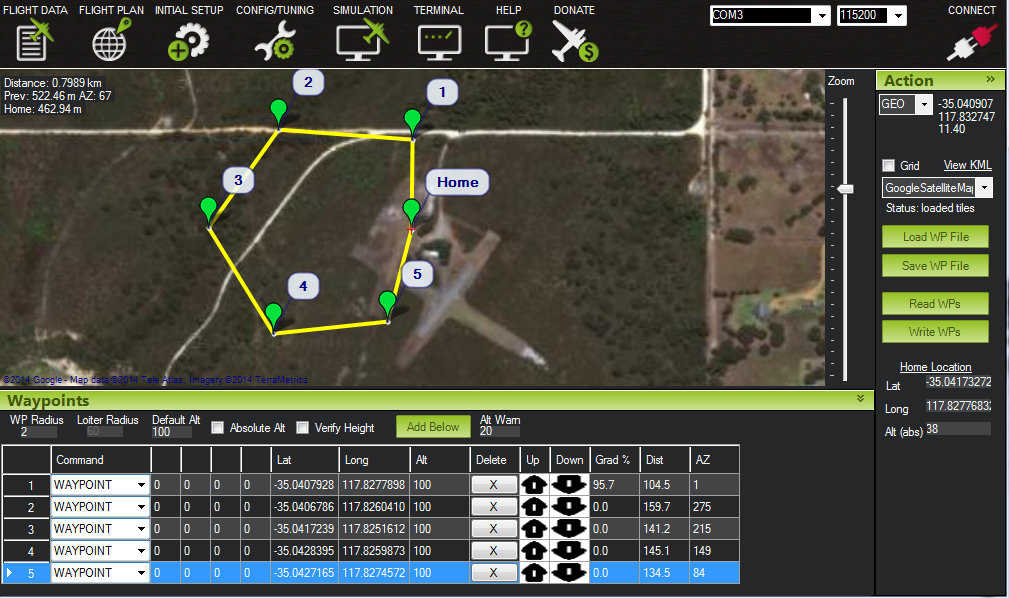

We decided on GPS navigation because the robot drives outside and only needs a basic waypoint-based path (front gate to the dorms). You define the waypoints in Mission Control, and the path is compiled to motor commands using ArduPilot. The Pixhawk then executes those motor commands to drive the rover between waypoints.
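ArduPilot handles waypoint following internally, so none of this needs to be written by hand. Purely as an illustration of the idea, the sketch below measures the distance to the current waypoint and moves on to the next one when the rover gets close enough. The function names, the 2 m acceptance radius, and the coordinates are all made up for the example.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def distance_m(lat1, lon1, lat2, lon2):
    """Great-circle (haversine) distance between two GPS points, in metres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (
        math.sin(dphi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    )
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def next_waypoint_index(position, waypoints, index, radius_m=2.0):
    """Advance once the rover is within radius_m of the current waypoint."""
    if index < len(waypoints):
        if distance_m(*position, *waypoints[index]) <= radius_m:
            return index + 1
    return index


# Usage with made-up (latitude, longitude) pairs.
route = [(40.00000, -75.00000), (40.00050, -75.00000)]
rover = (40.00001, -75.00000)  # roughly 1 m from the first waypoint
print(next_waypoint_index(rover, route, 0))  # 1
```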

This system is surprisingly accurate and is often enough to get the robot to its destination, but for the moments when it isn't, we need a backup.

Object detection

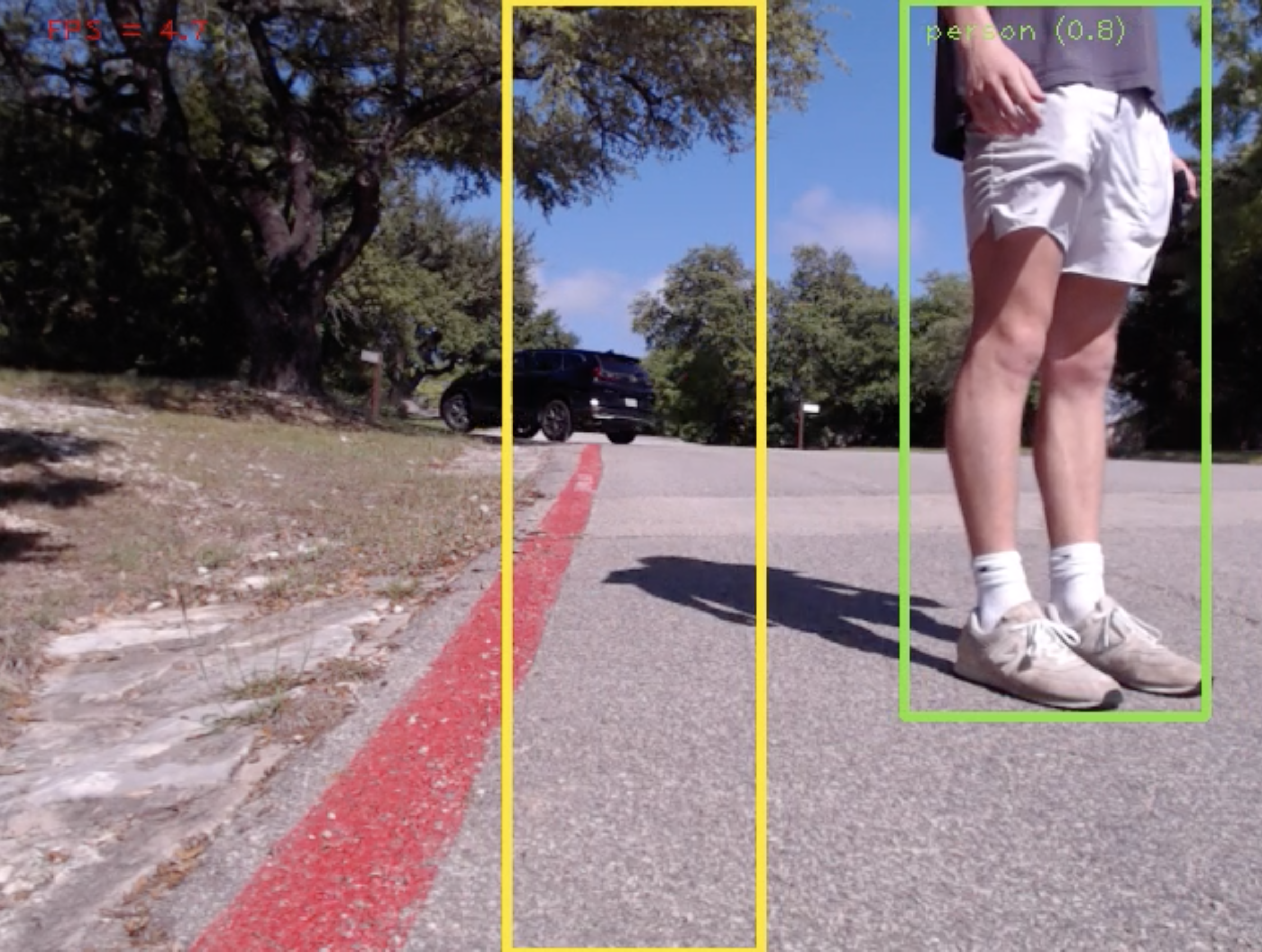

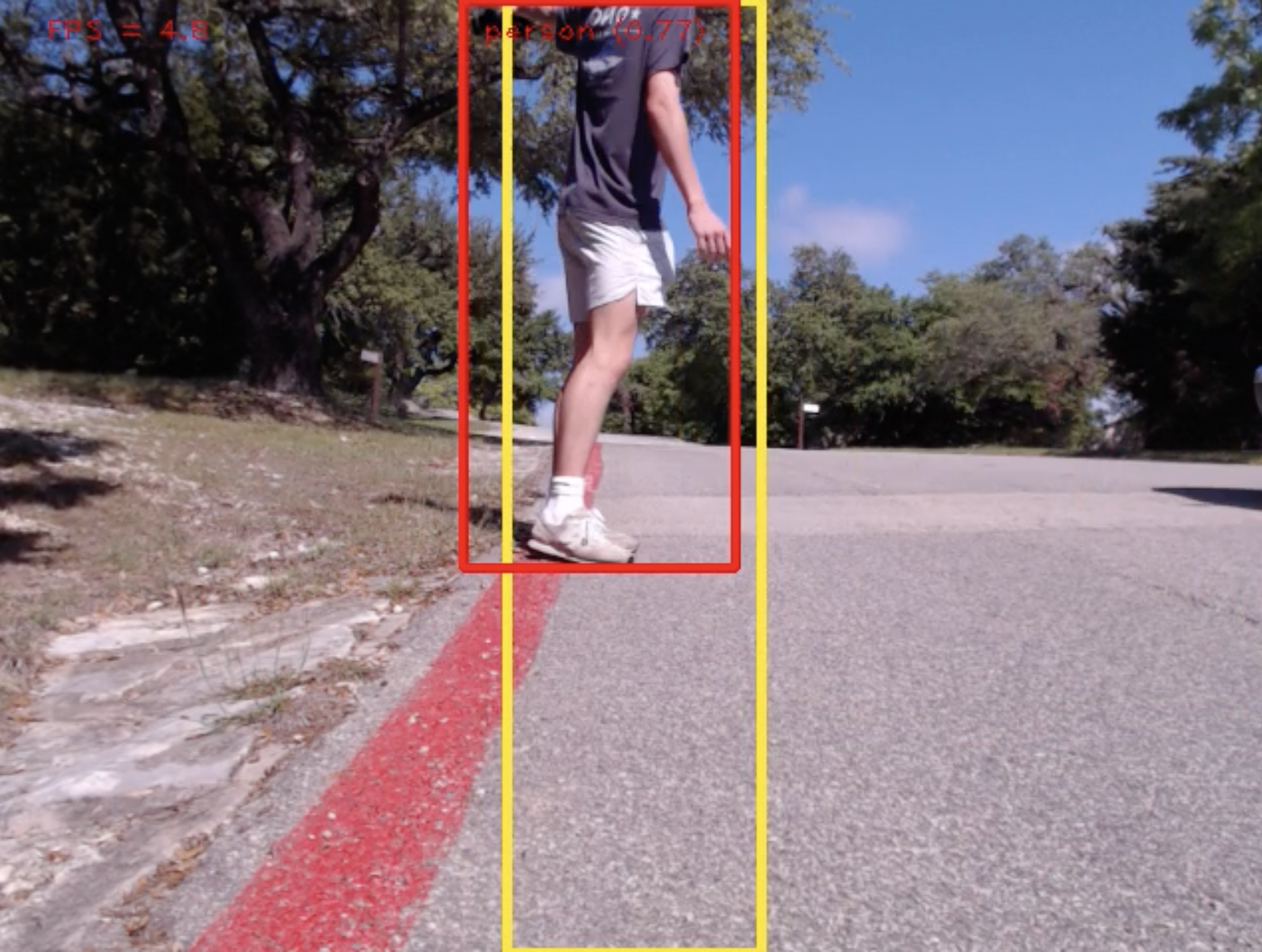

To detect people, I'm running a pre-trained TensorFlow Lite model and feeding it frames from a webcam that's plugged into the Raspberry Pi. Before stopping the motors, the system checks the size and position of each human detection to make sure the person is large in the frame (close) and in front of the rover. This reduces the number of false alarms but can delay the rover's reaction because the Raspberry Pi can only process about 4 frames per second. So far, reaction time has not been a huge issue.
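The size-and-position check could look something like the sketch below. It is an illustration under assumptions, not the rover's actual code: the `Detection` class, the normalized box coordinates, and every threshold value are hypothetical and would need tuning on real footage.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    score: float
    # Box corners as fractions of the frame (0.0 to 1.0), origin at top-left.
    xmin: float
    ymin: float
    xmax: float
    ymax: float


def should_stop(detections, min_score=0.5, min_height=0.5, center_band=0.3):
    """Return True if any confident, large, centered person box is present."""
    for d in detections:
        if d.label != "person" or d.score < min_score:
            continue
        height = d.ymax - d.ymin
        center_x = (d.xmin + d.xmax) / 2
        is_close = height >= min_height  # a tall box means the person is near
        is_ahead = abs(center_x - 0.5) <= center_band / 2  # middle of frame
        if is_close and is_ahead:
            return True
    return False


# Usage: a distant person, a person off to the side, and a person up close.
far = Detection("person", 0.9, 0.45, 0.4, 0.55, 0.6)
side = Detection("person", 0.9, 0.0, 0.1, 0.3, 0.9)
near = Detection("person", 0.9, 0.3, 0.1, 0.7, 0.9)
print(should_stop([far, side]))  # False
print(should_stop([far, near]))  # True
```

At about 4 frames per second, a fresh decision like this arrives roughly every 0.25 seconds.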

The rest of the detection system is visualization (drawing boxes on the frame) and logging, so that we can see what the rover is doing and why it's doing it. Since the Raspberry Pi has a Wi-Fi card, you can use VNC Viewer to see through the rover's camera (except near the front gate, where the connection is terrible).
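For the logging half, one simple approach is to write one line per frame stating the action and the reason behind it. This is a sketch using Python's standard `logging` module. The logger name, the message format, and the `log_decision` helper are assumptions for illustration.

```python
import logging

logger = logging.getLogger("rover.detection")


def log_decision(frame_index, stop, reason):
    """Record what the rover decided for one frame and why."""
    action = "STOP" if stop else "DRIVE"
    message = f"frame={frame_index} action={action} reason={reason}"
    logger.info(message)
    return message


# Usage: timestamps make it easy to line the log up with the video feed.
logging.basicConfig(level=logging.INFO, format="%(asctime)s %(message)s")
log_decision(12, True, "person close and centered")
log_decision(13, False, "no person in frame")
```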

Flycatcher - Waitlist Builder

Build your custom branded waitlist, collect insights about your subscribers, and send email campaign...

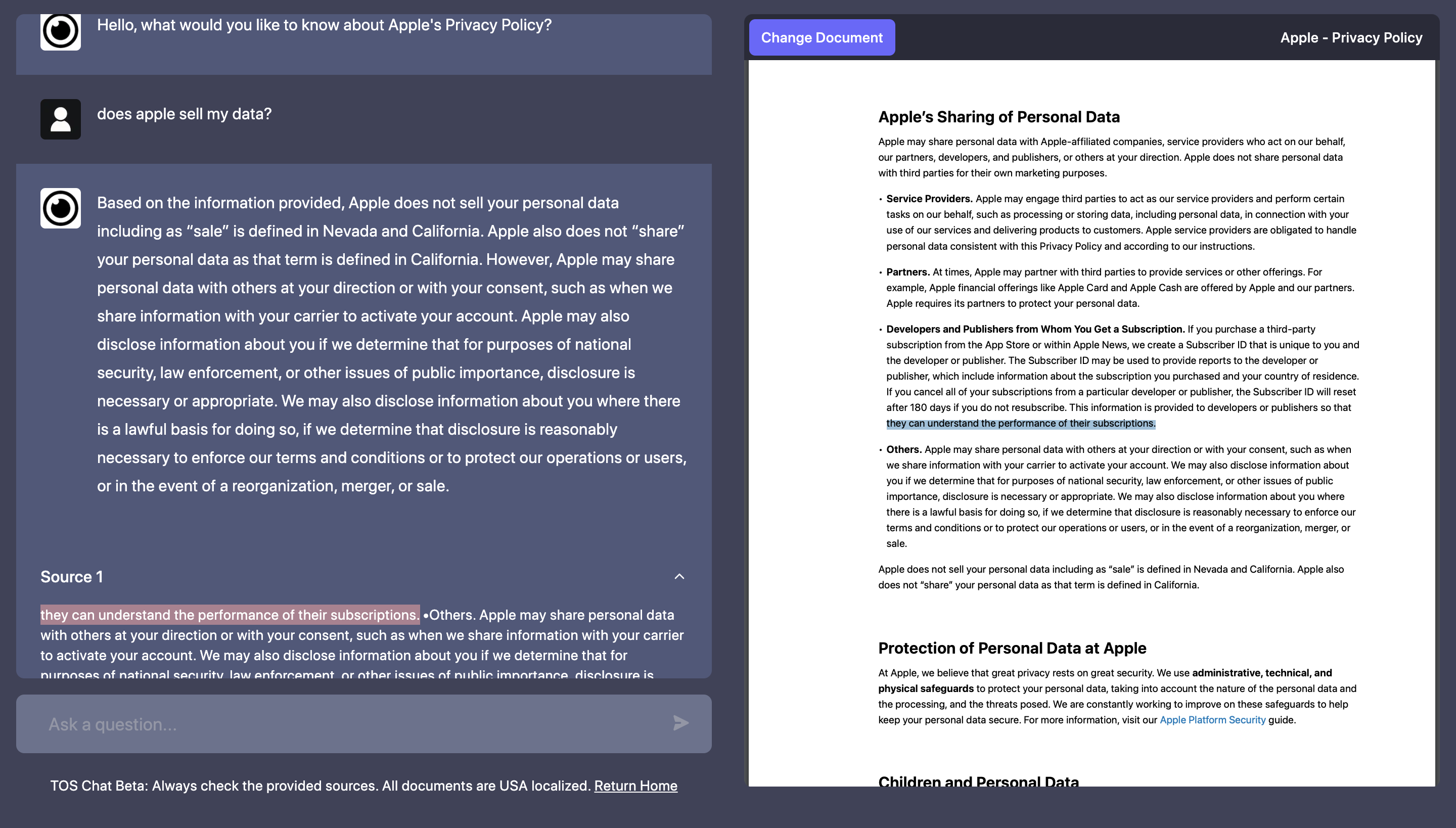

TOS Chat - Custom AI Chatbot

Custom GPT chatbot that allows the user to 'chat' with terms of service and privacy policy agreement...

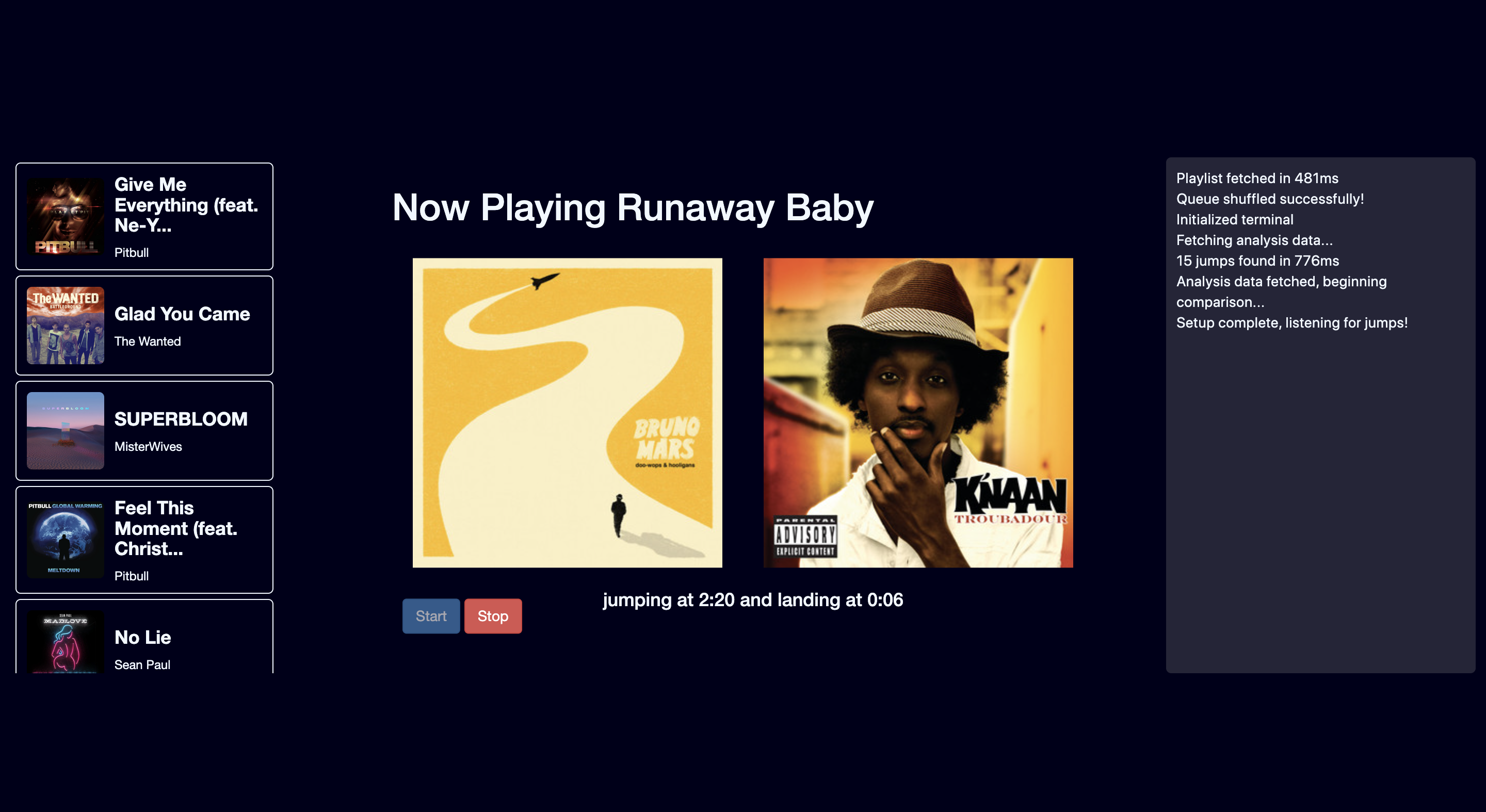

Playlist Assist - Spotify Auto-DJ

Spotify companion app that creates beat-matched transitions within your Spotify playlist. Remotely ...

All Posts